Reflection choices and rituals that put AI to work as a teammate

December offers a rare pause: a pocket of time when teams naturally slow down, look back, and look ahead. It’s tempting to use that time to draft resolutions or curate highlight reels. This year, try something bolder: use the moment to move AI from the edges of your work to the operating core. Many teams still treat AI like a novelty or a personal productivity boost: handy for transcribing notes or drafting emails, useful in rare bursts, invisible to the rituals that actually power the business. That pattern yields pockets of efficiency, but it does little to raise the collective intelligence of the team or increase throughput on what matters most.

Putting AI at the center is not about “using AI more.” It’s about redesigning how work happens so AI shows up in the moments that shape clarity, alignment, decisions, and follow-through. That means explicitly inviting AI into the room, not just to send the recap later, but as a visible, well-understood participant in the meeting arc. The shift is cultural as much as it is technical: moving from “What can I do faster alone?” to “What can we do better together, with AI as a teammate?” When you do that, you convert isolated wins into compounding outcomes that are visible across the system.

Think of this month as your strategic reset. Which rituals served you in the past but now hold you back? Which decisions routinely stall? Where does work bottleneck across roles or functions? Use those questions to identify places where AI can be designed in from the start—so it supports how you diverge, synthesize, converge, and decide. If the holidays tend to be a season of gifts, the gift you can give your future self is a deliberate redesign: AI-centered practices that create speed with quality and enable momentum you can feel.

Make AI a visible co-facilitator across the arc

The simplest way to re-center AI is to thread it through the full arc of a session—open, explore, decide, close—instead of sprinkling it into isolated moments. In the opener, invite participants to pair safely with AI. A prompt like “Ask AI to generate three provocative ‘what if’ questions about our purpose today—keep one that expands your thinking” primes both curiosity and comfort. When you normalize AI’s presence early, the team spends less energy on whether AI belongs and more on the quality of the work you’ll do together.

During divergence, let humans generate the raw ideas and let AI extend the option space: reframes, constraints, adjacent patterns, and “non-obvious” complements. As energy naturally shifts toward convergence, ask AI to produce a first synthesis—short, imperfect, and testable. When an AI-generated synthesis is on the table, people react faster and more concretely: “We can live with these parts, but not those.” That reaction accelerates prioritization and brings hidden misalignments into the open. Your job as a facilitator is to toggle the modes—solo-with-AI, small-group-with-AI, humans-only—and make those transitions visible so learning compounds.

Close with intent. A strong closer doesn’t just capture what happened; it evaluates how you worked with AI. Try, “What did AI do today that saved us time or improved quality? What should we ask it to avoid next time? What guardrails do we need to add?” Verifying AI summaries live, while the group can correct and clarify, prevents drift and creates a shared memory. Over time, the team will feel the difference: AI is no longer a shadow tool; it’s a visible collaborator that helps you open, expand, pattern, and decide.

Decide how to decide with AI

Where teams lose the most time isn’t in generating ideas; it’s in making decisions. Endless loops, ambiguous thresholds, and unclear ownership sap energy. AI can help here—if you design the decision rules. Start by choosing one recurring decision that often creates churn (e.g., prioritizing backlog items, approving experiments, selecting messaging). Ask AI to propose three viable options with explicit trade-offs and risks, then use a consent-based method to move. Consent beats consensus when speed and learning matter because it asks, “Is this safe to try now?” instead of “Does everyone love it?”

Design an escalation path before you decide: when does human judgment override AI-suggested options; who breaks ties; what evidence triggers a revisit? Ask AI to draft that “decide how to decide” canvas, then tune it as a team. You can further improve momentum by capturing objections in context. Instead of archiving dissent, structure it: What threshold of evidence would resolve this objection? What signal would confirm a risk is materializing? Feed those conditions into your AI memory so it knows when to surface a check—preventing unnecessary re-litigation while honoring new learning.
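One way to picture structured objections is as a small record the team (or its AI memory) can check against new evidence. The sketch below is illustrative only: the `Objection` and `Decision` classes, their field names, and the example metrics are all hypothetical, not part of any specific tool.

```python
from dataclasses import dataclass, field


@dataclass
class Objection:
    """One structured objection captured at decision time (hypothetical schema)."""
    raised_by: str
    concern: str
    metric: str                 # the signal the team agreed to watch
    revisit_at_or_above: float  # level that would confirm the risk is materializing


@dataclass
class Decision:
    """A decision plus the escalation details agreed before deciding."""
    title: str
    tie_breaker: str            # who breaks ties
    human_only: bool            # True if AI may propose but must never decide alone
    objections: list[Objection] = field(default_factory=list)


def objections_to_surface(decision: Decision, observed: dict[str, float]) -> list[Objection]:
    """Return only the objections whose agreed signal has crossed its threshold."""
    return [
        o for o in decision.objections
        if o.metric in observed and observed[o.metric] >= o.revisit_at_or_above
    ]


# Example with made-up numbers: only the legal objection has met its threshold.
decision = Decision(
    title="Ship simplified onboarding copy",
    tie_breaker="product lead",
    human_only=False,
    objections=[
        Objection("support", "May raise ticket volume", "weekly_tickets", 120),
        Objection("legal", "Claims may need review", "flagged_claims", 1),
    ],
)
due = objections_to_surface(decision, {"weekly_tickets": 95, "flagged_claims": 2})
print([o.raised_by for o in due])  # ['legal']
```

The point of the design is that a revisit is triggered by evidence the team named in advance, not by whoever feels like reopening the debate.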

Finally, draw the line on where AI must never decide alone. Ethics, safety, brand integrity, people decisions—name the categories that require human ownership. That act clarifies roles and builds trust. Then define the inverse: Where should AI always propose first, so humans can accelerate judgment? When you codify both, decision-making becomes transparent and repeatable. You move faster not because you cut corners, but because the lanes are clear and the work of deciding is designed.

Agreements that build trust with humans and AI

If AI is going to sit at the center, it deserves formal working agreements—just like any teammate. These are short, visible norms that define boundaries, transparency, and shared responsibilities. They protect against two extremes you’ll likely find in any room: over-trusters who accept AI output without scrutiny and under-trusters who refuse to engage. Clear agreements pull the team into the productive middle, where AI accelerates and humans ensure quality.

Start small and make it living. Define what you will disclose and when (“Call out where AI contributed,” “Note the model or tool when relevant,” “Flag data sensitivity”), what you will verify every time (“We always review AI summaries live,” “We validate references, quotes, numbers”), and what you will avoid (“No AI generation on sensitive HR matters,” “No autonomous approvals”). Include bias checks in your openers—simple prompts like “Ask AI to generate counter-arguments from diverse perspectives” or “Scan for missing stakeholders.” Add a consent renewal check each month: “Are we still comfortable with how AI shows up in our work? What needs to change?”

Treat these agreements as pop-up rules that evolve as you learn and as the tools improve. Post them in the room or at the top of your collaborative doc. Invite the whole team to co-author and revisit them monthly. The act of co-creating and refreshing agreements builds trust, creates psychological safety, and reduces risk. It also sends a clear signal to your organization: AI here is not a stealth add-on—it’s an explicit collaborator governed by shared norms.

Redesign whole workflows, not isolated prompts

The biggest gains happen when you stop sprinkling prompts and start threading AI through end-to-end workflows. Pick one journey that matters (e.g., discovery to delivery, feature rollout to customer comms, incident to learning review), map the gates, and design AI invitations at each gate. Replace ad hoc “someone remembers to prompt” with structured moments: AI drafts a brief to react to; AI proposes test conditions; AI synthesizes stakeholder quotes; AI surfaces pattern risks; AI produces the first pass of the decision memo. None of this removes human accountability; it changes where human attention is most valuable.

Blueprints help you see the gaps. A quick service blueprint or journey map reveals where work crosses silos, where it stalls, and where people repeatedly rebuild context from scratch. That’s where AI can remove friction: creating living memory that recurs at each gate, sparking first drafts that the team can critique, highlighting dependencies you might miss. These are not “set-and-forget” automations running in the background; they are deliberate, in-the-room invitations that elevate the quality of collaboration while the team is together.

Prototype a threaded flow you can test in two weeks. Give it a visible name so the team can reference it (“Release Flow 1.0”). Pause an old ritual while you test, and watch which gaps emerge without it. Resist the urge to recreate the ritual—solve for the gap instead. Run a retro at the end and ask, “Where did AI add speed without sacrificing judgment? Where did it distract? Which gate needs a new invitation?” That cycle—prototype, run, retro, tune—compounds quickly and makes AI-centered work feel real, not theoretical.

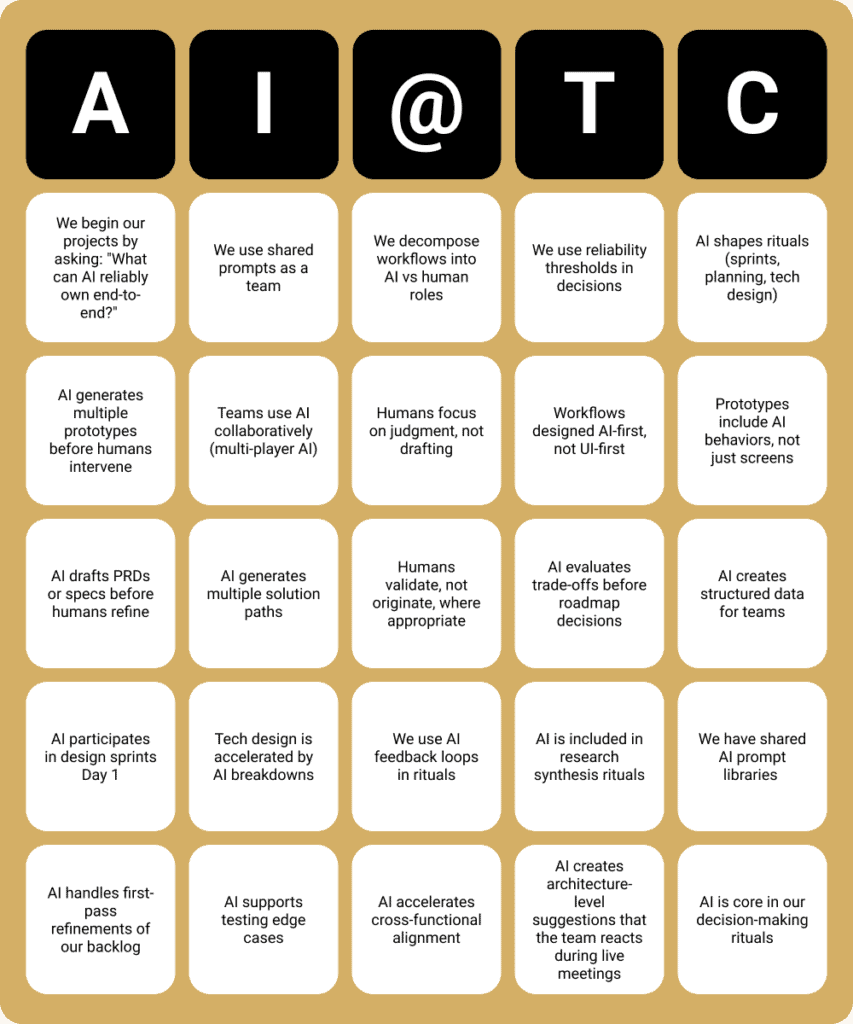

Activity of the Month: AI-at-the-Center Bingo Diagnostic

To make the shift from edge use to center use tangible, run AI-at-the-Center (AI @ TC) Bingo with your team. It’s a demanding diagnostic disguised as a playful game. Each square represents a concrete behavior: AI drafting specs, shaping rituals, generating prototypes, supporting decision-making, producing live synthesis, capturing objections, or maintaining living memory. The rule is simple and strict: mark only what is consistently true every week. Aspirations and one-off experiments don’t count. That constraint makes the results honest, and honesty reveals where you really are on the maturity curve.

Run it as a fast, focused session. Start with a check-in that frames AI as a co-facilitator, not a mandate. Distribute the card (digital or printed), and give individuals a few minutes to mark their practice. Then compare patterns in small groups and as a whole. Where do you cluster at the periphery—personal productivity, transcription, occasional ideation? Where are there blank rows in the center—decision rules, consent methods, role clarity, living memory? Use the scoring guide to place yourselves on the spectrum from “AI at the Periphery” to “AI at the Center,” and normalize the result. Most teams discover they are earlier in maturity than they assumed. That’s a feature, not a bug; it creates a shared starting line.
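If you collect the cards digitally, comparing patterns can be as simple as a tally. The sketch below is a rough illustration, not the official scoring guide: the `CARD` layout, the zone labels, and the 50% cutoff are assumptions made for the example, with square names borrowed from the periphery and center examples above.

```python
from collections import Counter

# Hypothetical card: square name -> zone. The real card and scoring guide
# are not reproduced here.
CARD = {
    "personal productivity": "periphery",
    "transcription": "periphery",
    "occasional ideation": "periphery",
    "decision rules": "center",
    "consent methods": "center",
    "role clarity": "center",
    "living memory": "center",
}


def tally(marks: list[set[str]]) -> dict[str, float]:
    """Share of participants who marked each square as true weekly."""
    if not marks:
        raise ValueError("need at least one participant's card")
    counts = Counter(sq for person in marks for sq in person if sq in CARD)
    return {square: counts[square] / len(marks) for square in CARD}


def center_gaps(shares: dict[str, float], cutoff: float = 0.5) -> list[str]:
    """Center squares marked by fewer than `cutoff` of participants (assumed cutoff)."""
    return [s for s, share in shares.items() if CARD[s] == "center" and share < cutoff]


# Three made-up cards, each a set of squares the person marked.
marks = [
    {"transcription", "personal productivity", "decision rules"},
    {"transcription", "occasional ideation"},
    {"transcription", "personal productivity", "living memory", "decision rules"},
]
shares = tally(marks)
print(center_gaps(shares))  # ['consent methods', 'role clarity', 'living memory']
```

The resulting list of gaps is a conversation starter for the group debrief, not a verdict. The team still decides which gaps matter most.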

Turn the snapshot into action. Choose one to three gaps to prototype in January. For each, define a visible artifact that will signal progress: a decision rule canvas, a weekly AI check-in, a living agenda template, or a workflow blueprint. Be thoughtful about who is in the room for the diagnostic—invite adjacent roles (ops, legal, data, customer success) to get a fuller picture and avoid blind spots. Set expectations upfront to reduce performative responses: “We mark only what’s truly weekly in our current practice.” Watch the AI-at-the-Center Bingo Diagnostic video for a quick walkthrough, and then schedule your session now while the year-end reflection energy is high.

Turn reflection into January momentum

Reflection is valuable only if it converts to habit. Translate your December insights into operating rhythms you can see on the calendar. Start with one weekly ritual that anchors AI at the center—for example, a 25-minute Monday “AI Enablement Standup” where each team member names one place AI will draft first, one place AI will synthesize live, and one decision where AI will propose options. Layer in a monthly agreement review to refresh guardrails, renew consent, and adjust bias checks. Consider a quarterly redesign sprint focused on one workflow—prototype, measure, and share what you learned with the broader org.

Build machine memory plus human judgment into your closers. Use AI to produce a concise, decision-forward summary while you’re still in the room, then verify it as a group. Document objections with thresholds and next checks. Feed the summary forward into the next agenda so you don’t rely on imperfect recall. Choose a simple template to house decisions, context, and learnings, something your team will actually use. Establish a review cadence that keeps insights alive: weekly review for open decisions, monthly scan of agreement health, quarterly synthesis of what changed because of your AI-centered experiments.

Measure your momentum without micromanaging. Define two or three outcome signals that matter (reduction in time-to-decision, fewer re-opened debates, more cross-silo throughput, clearer accountability). Balance those with boundary checks that protect ethics, equity, and brand trust. Start small but start now: schedule one AI-at-the-Center Bingo session, pick one decision to move to consent with AI-generated options, and prototype one threaded workflow. Then tell us how it went—your stories help our community learn faster together.

Closing and call to action

If this year taught us anything, it’s that isolated use of AI by individuals yields isolated benefits. The organizations that will see meaningful ROI in 2025 will be the ones that put AI at the center—visible, designed-in, and co-facilitating the work that shapes results. That shift is not a top-down mandate. It’s a collaborative exploration where teams redesign rituals, clarify roles, codify decisions, and build living memory. It’s multiplayer AI, sitting in the room, helping us open the option space, converge faster, and decide with more clarity and less churn.

As you wrap December and look toward the new year, choose action over aspiration. Run the AI-at-the-Center Bingo Diagnostic. Draft your first “decide how to decide” canvas. Co-create three working agreements that will build trust between humans and AI. Prototype one threaded workflow you can test in two weeks. Put the cadence on your calendar now—commit by schedule, not by enthusiasm.

We’re here to help you make it real. Want the AI @ TC Bingo card, the scoring guide, and the activity video? Ready to bring a Voltage Control facilitator in to co-design your January flow or to run an AI-centered redesign sprint with your leadership team? Curious about integrating these practices into your Facilitation Certification journey? Reply to this newsletter or reach out to our team and we’ll get you everything you need. Let’s make 2025 the year your team moves AI from the edges to the center—and feels the difference in every meeting, every decision, and every outcome.